Up to now, the ‘Protect Your Microsoft 365 Data in the Age of AI’ series consists of the following posts:

- Protect your Microsoft 365 data in the age of AI: Introduction

- Protect your Microsoft 365 data in the age of AI: Prerequisites

- Protect your Microsoft 365 data in the age of AI: Gaining Insight

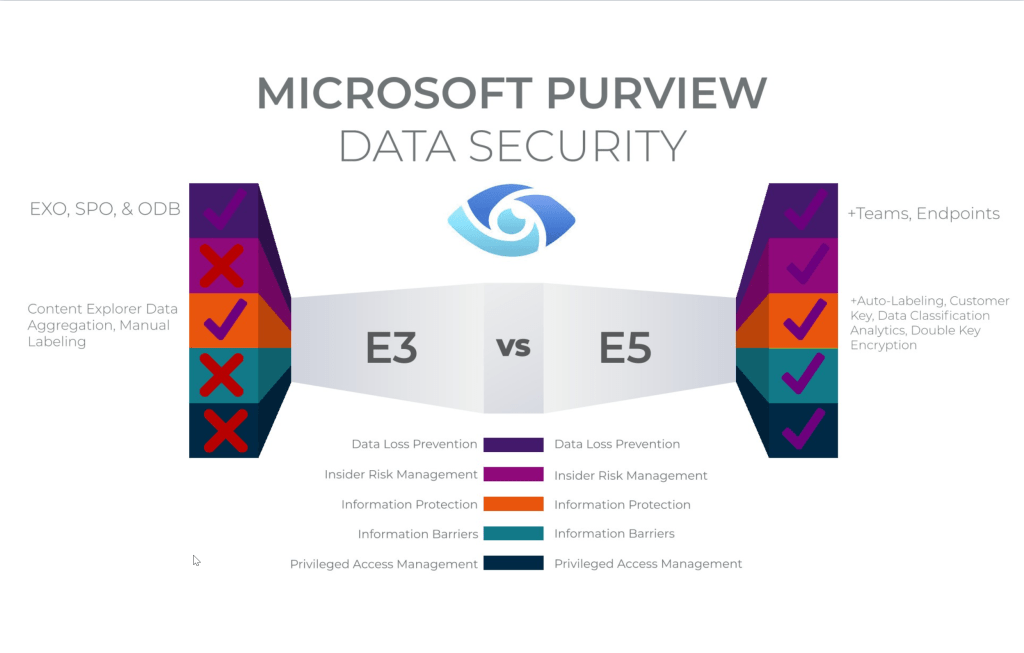

- Protect your Microsoft 365 data in the age of AI: Licensing

- Protect your Microsoft 365 data in the age of AI: Prohibit labeled info to be used by M365 Copilot (This post)

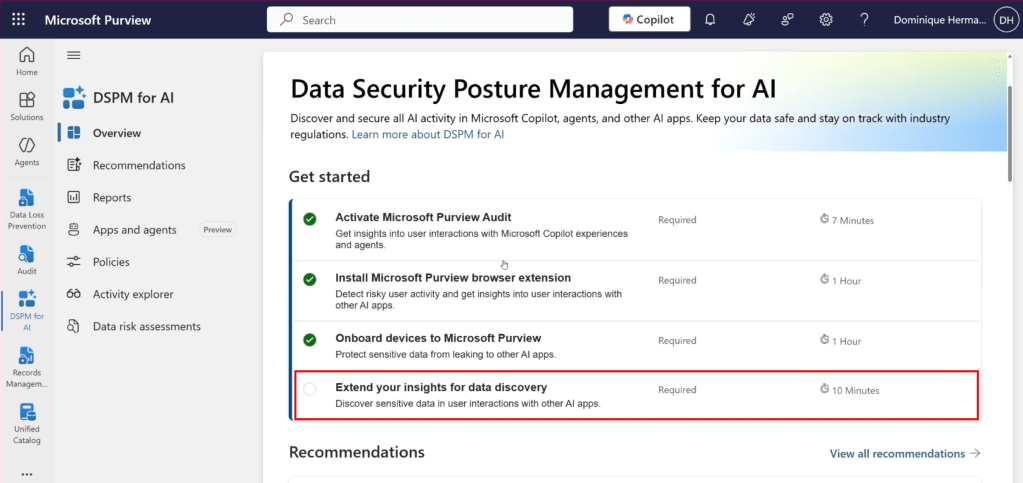

I previously wrote about using DLP policies, labeling, and removing the EXTRACT permission from your label to prevent Microsoft 365 Copilot from looking into your sensitive information. However, those posts are a couple of months old, and in Microsoft 365 land things move fast. The Microsoft 365 Copilot policy location is out of preview, so let’s take a fresh look at our options for preventing Microsoft 365 Copilot from using labeled sensitive information!

Please note that the policy is now (12/11/2025) split into two features:

- Restrict M365 Copilot and Copilot Chat processing sensitive files and emails. – This feature is based on sensitivity labels, is currently generally available (GA), and is the one discussed in this article.

- Restrict Microsoft 365 Copilot and Copilot Chat from processing sensitive prompts. – This feature is based on Sensitive Info Types (SITs), is currently in preview, and will be discussed in a future article when it hits GA.

Coverage

According to Microsoft Learn, the Data Loss Prevention (DLP) policy we can utilize to prevent Microsoft 365 Copilot from looking into our labeled sensitive information now supports “Specific content that Copilot processes across various experiences.”

- Microsoft 365 Chat supports:

  - Files, both stored items and items that are actively open.

  - Emails sent on or after January 1, 2025.

  - Calendar invites and local files are not supported.

- DLP for Copilot in Microsoft 365 apps such as Word, Excel, and PowerPoint supports files, but not emails.
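To make the coverage list easier to reason about, here is a minimal Python sketch that encodes it as a lookup function. It is only an illustration of the rules quoted above, not a Microsoft API: the names `Item`, `is_covered`, and `EMAIL_CUTOFF` are hypothetical, and anything the list does not mention is treated as not covered, which is my own conservative assumption.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Cut-off from the coverage list: emails sent on or after this date.
EMAIL_CUTOFF = date(2025, 1, 1)


@dataclass(frozen=True)
class Item:
    """Hypothetical description of a piece of content Copilot might process."""
    kind: str                        # "file", "email", or "calendar_invite"
    is_local: bool = False           # True for files that only live on a local disk
    sent_on: Optional[date] = None   # only meaningful for emails


def is_covered(experience: str, item: Item) -> bool:
    """Return True if the coverage list says the DLP policy supports this item.

    experience: "chat" (Microsoft 365 Chat) or
                "app"  (Copilot in Word, Excel, or PowerPoint).
    """
    if experience == "chat":
        if item.kind == "file":
            # Stored and actively open files are supported; local files are not.
            return not item.is_local
        if item.kind == "email":
            # Only emails sent on or after January 1, 2025 are supported.
            return item.sent_on is not None and item.sent_on >= EMAIL_CUTOFF
        # Calendar invites are listed as unsupported. Any other kind is
        # treated as not covered (assumption, not stated in the source).
        return False
    if experience == "app":
        # The list only says "files, but not emails" for the apps. It is silent
        # on local files here, so this sketch does not model that case.
        return item.kind == "file"
    raise ValueError(f"unknown experience: {experience!r}")
```

For example, `is_covered("chat", Item("email", sent_on=date(2024, 12, 31)))` evaluates to `False`, because that email predates the cut-off, while the same call with `date(2025, 1, 1)` evaluates to `True`.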

However, the following note should be taken into account:

When a file is open in Word, Excel, or PowerPoint and has a sensitivity label for which DLP policy is configured to prevent processing by Microsoft 365 Copilot, the skills in these apps are disabled. Certain experiences that don’t reference file content or that aren’t using any large language models aren’t currently blocked on the user experience.

Copilot works with skills, each of which corresponds to a specific task. Examples are:

- Summarize actions in a meeting

- Suggest edits to a file

- Summarize a piece of text in a document

- etc

To sum up: when a file carries a label covered by the policy, skills like the ones above are disabled, while certain experiences that don’t reference file content or don’t use a large language model may remain available. Let’s review this after we configure the policy.

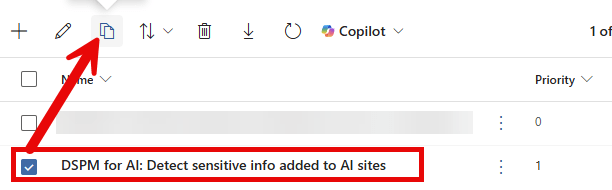

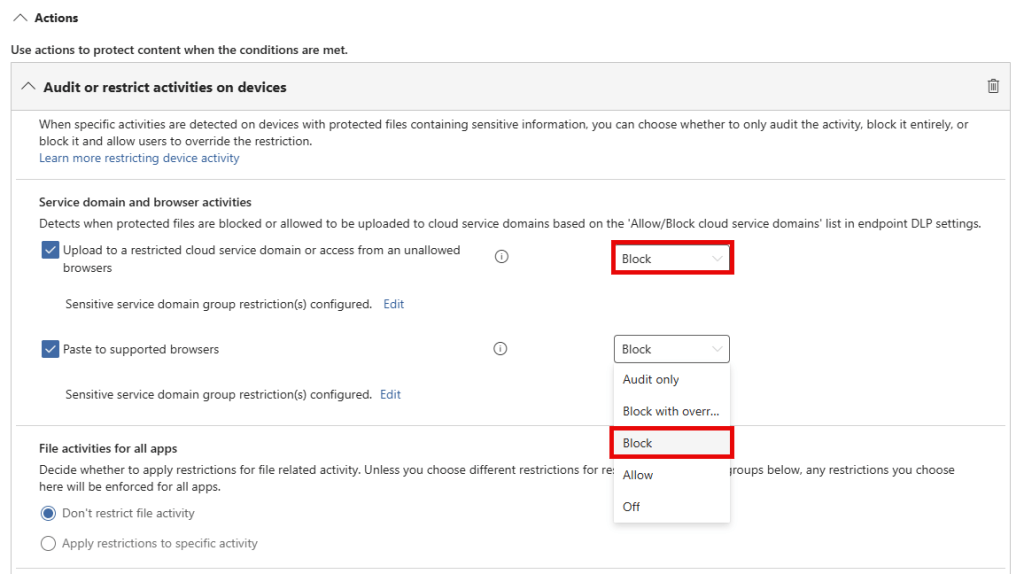

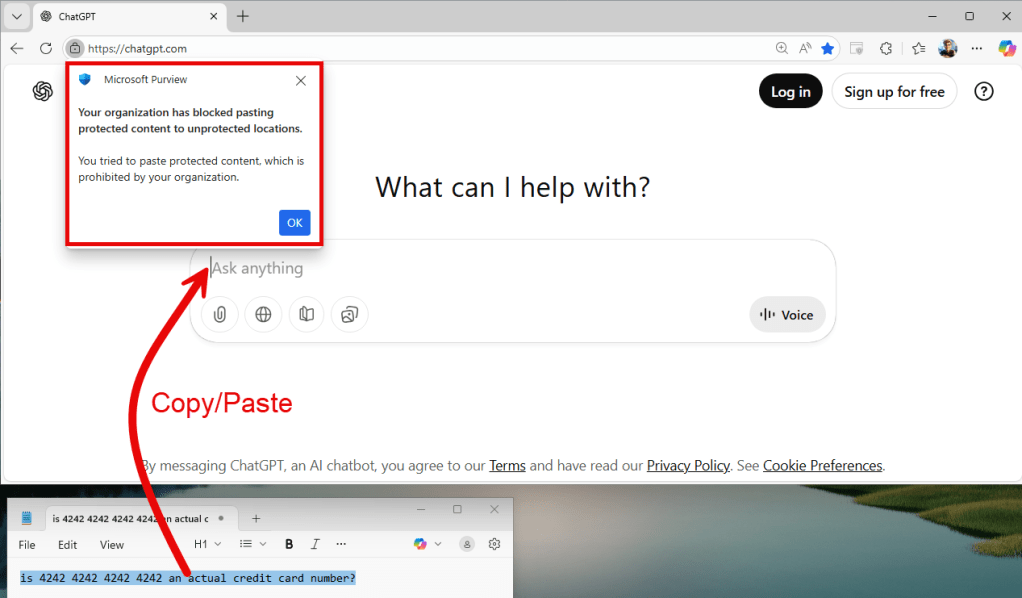

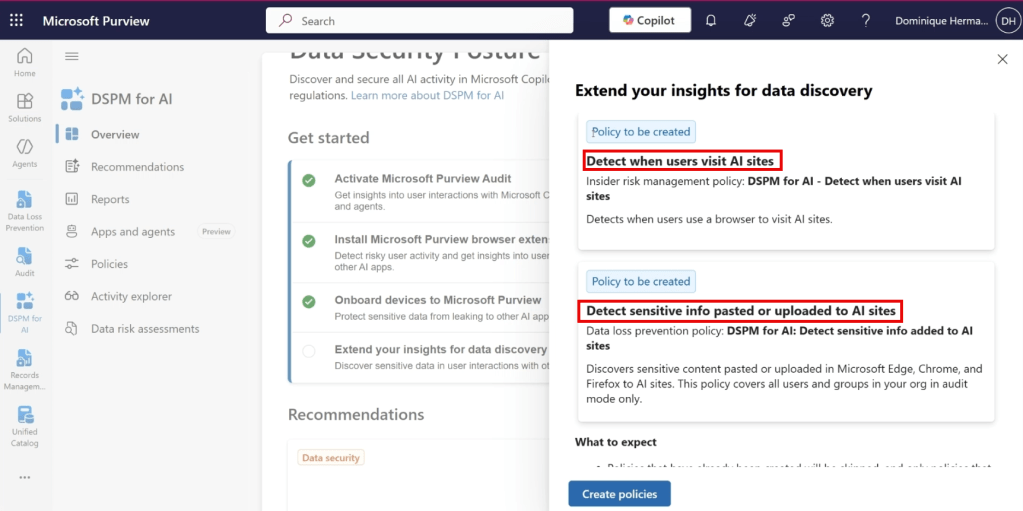

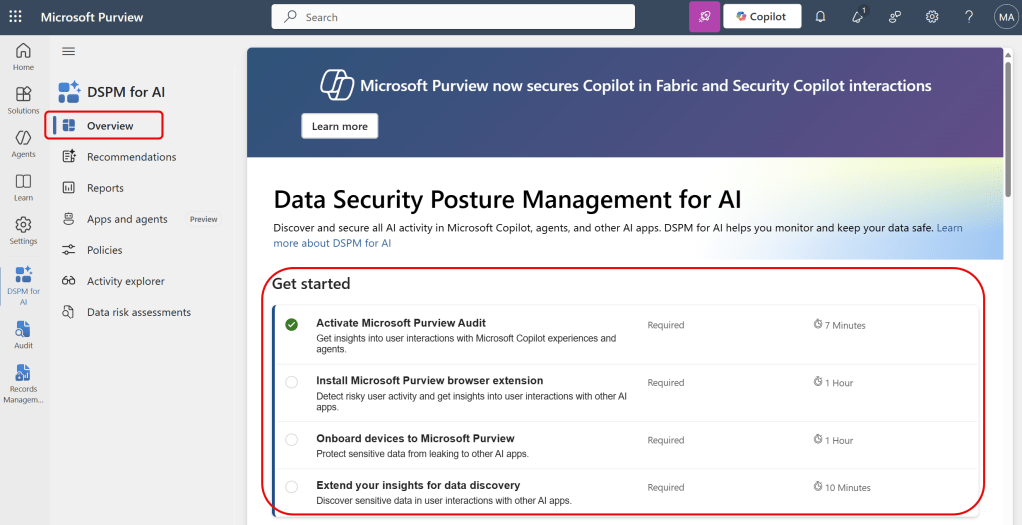

Policy Configuration

For configuration of the Microsoft 365 Copilot DLP policy, please refer to the section ‘Configuration of the M365 Copilot DLP Policy’ in my previous article on the matter. What you need to know is that a Data Loss Prevention policy scoped to the ‘Microsoft 365 Copilot and Copilot Chat’ location can be used to prevent M365 Copilot and Copilot Chat from using information in files labeled with a sensitivity label specified in your policy. Only the following properties have changed in the configuration since my previous article:
